Are you interested in safe and trustworthy AI?

CSX-AI: Safe and Explainable AI

If you’re convinced of AI’s benefits and its potential role in your company’s innovation roadmap, CSX-AI can help you distinguish between AI that might suit your needs and AI that you can safely trust.

We target systems where deep learning models implement essential functionalities such as perception, guidance, navigation, and control. Typical examples include self-driving cars, unmanned aerial vehicles, satellite control systems, digital biometrics, digital finance, digital healthcare, and conversational AI.

These systems usually run in open and dynamic environments, with human users involved. In an open environment, the conditions under which a system is built (or trained) can differ from those under which it operates. For example, the perception module of a self-driving car may be trained on traffic data collected in London, while the car may eventually drive on Paris roads. In a dynamic environment, conditions may change while the system is running. For example, lighting, traffic, and passenger conditions may change as the car drives.

For such systems, we focus on two critical perspectives:

Safety

We expect the systems to operate without failures and without harmful consequences such as bias or data privacy leakage. A failure can be defined with respect to different properties.

Trustworthiness

We expect human users (developers, regulators, end users, etc.) to be appropriately informed about the systems’ status and decision-making mechanisms, so that they are willing to rely on AI systems.

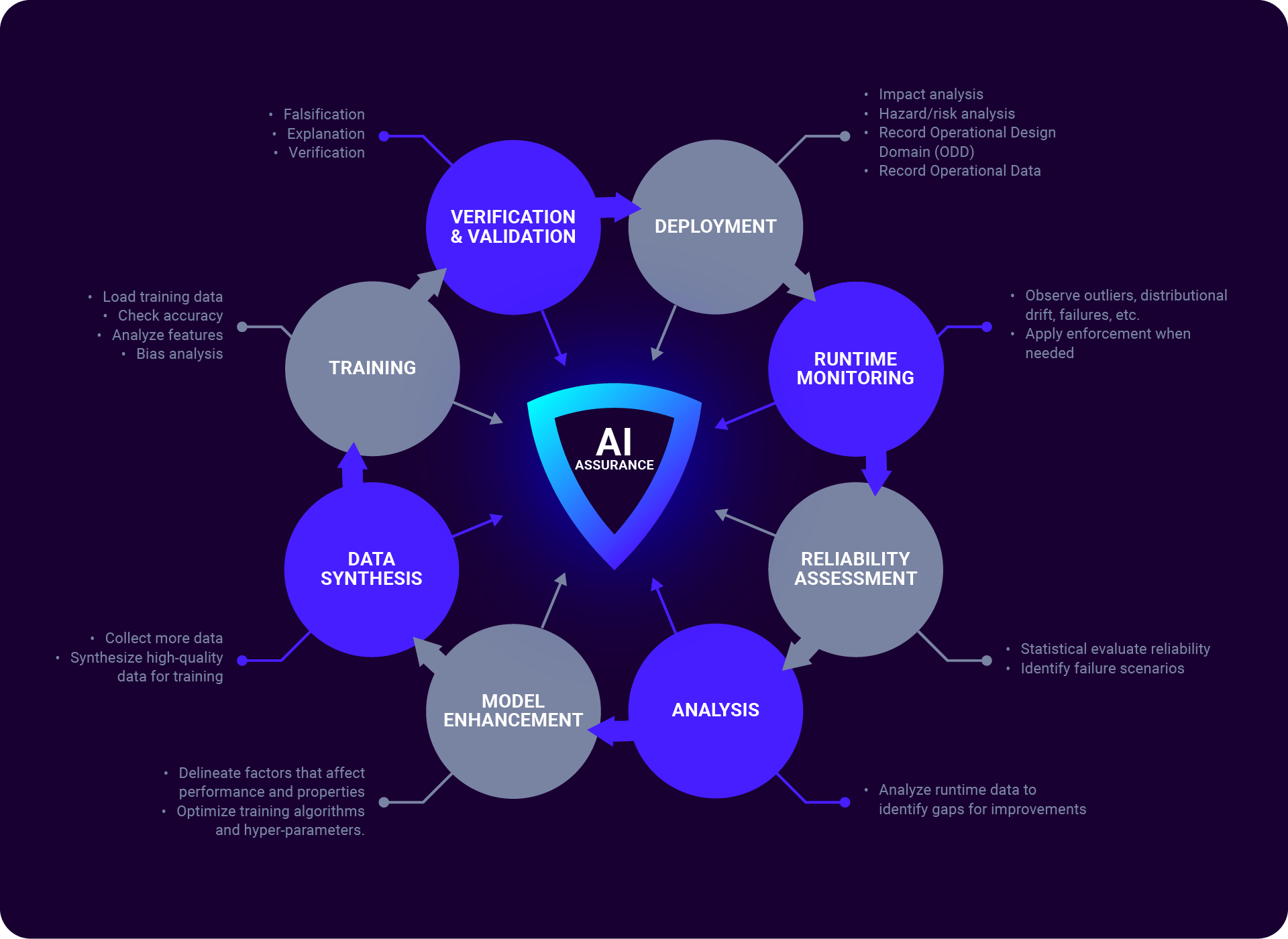

CSX-AI aims to develop its approach for AI components in the following three stages:

- First, develop an interpretable backbone for a range of lifecycle verification and validation (V&V) methods, including offline analysis and runtime monitoring.

- Second, building on this backbone, develop targeted lifecycle V&V methods that are more interpretable and more accurate than existing methods, with a focus on improving the ability of runtime monitoring to cope with open and dynamic environments.

- Third, building on the first two stages, develop a whole-system approach to AI assurance that enables the ultimate certification of AI systems (from individual AI components, to single learning-enabled systems, to systems of systems) in a rigorous and practical way.

CSX-AI Lifecycle V&V Methods to Support AI Assurance